Hybrid MPI and OpenMP

1. MPI and OpenMP: two complementary parallelization models

- MPI is a multi-process model in which communication between processes is explicit: managing it is the responsibility of the programmer. MPI is generally used on distributed-memory multiprocessor machines. It is a library for passing messages between processes that do not share memory.

- OpenMP is a multithreaded model in which communication between threads is implicit: it takes place through shared memory and is managed by the compiler. OpenMP is used on shared-memory multiprocessor machines. It is a language extension for expressing data-parallel operations, usually loops over arrays.
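The implicit, loop-oriented style described for OpenMP can be sketched as follows. This is a minimal illustration, not code from any particular application: the helper names `scale_array` and `sum_array` are hypothetical. Both loops run over a shared array, and no communication is written by hand.

```c
/* Hypothetical helper: multiply every element of x by a, in place.
 * The pragma asks the compiler to divide the loop iterations among
 * threads. All threads see the same array x, so nothing is sent. */
void scale_array(double *x, int n, double a)
{
    #pragma omp parallel for
    for (int i = 0; i < n; i++) {
        x[i] *= a;
    }
}

/* Hypothetical helper: return the sum of x[0..n-1].
 * The reduction clause gives each thread a private partial sum and
 * combines the partial sums when the loop ends. */
double sum_array(const double *x, int n)
{
    double total = 0.0;
    #pragma omp parallel for reduction(+:total)
    for (int i = 0; i < n; i++) {
        total += x[i];
    }
    return total;
}
```

With GCC or Clang, OpenMP is typically enabled with `-fopenmp`. Without that flag the pragmas are ignored and the same code runs serially, which is one reason adding OpenMP to existing code tends to be incremental.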

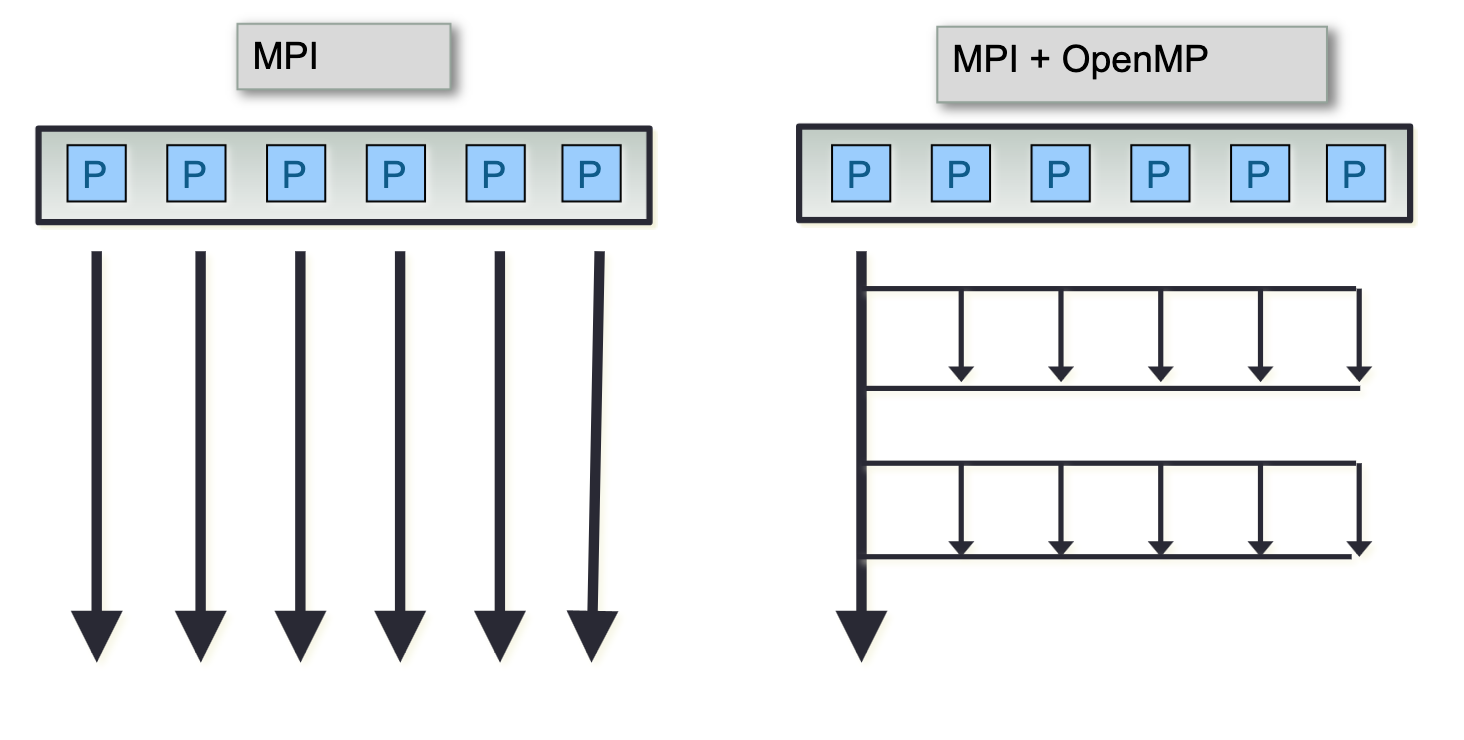

Note: on a cluster of independent shared-memory multiprocessor machines (nodes), the implementation of a two-level parallelization (MPI, OpenMP) in the same program can be a major advantage for the parallel performance of the code.

2. MPI vs OpenMP comparison

| MPI vs. OpenMP | MPI | OpenMP |
| --- | --- | --- |
| Pros | Portable to distributed- and shared-memory machines; scales beyond a node; no data placement issues | Easy to implement parallelism; implicit communication; low latency, high bandwidth; dynamic load balancing |
| Cons | Explicit communication; high latency, low bandwidth; difficult load balancing | Only on nodes or shared-memory machines; scales only within a node; data placement problem |

Hybrid application programs using MPI + OpenMP are now commonplace on large HPC systems. There are basically two main motivations for this combination of programming models:

1. Reduced memory footprint, both in the application and in the MPI library (e.g., communication buffers).

2. Improved performance, especially at high core counts where pure MPI scalability runs out.

3. A common hybrid approach

- From sequential code: parallelize with MPI first, then try adding OpenMP.
- From MPI code: add OpenMP.
- From OpenMP code: treat it as serial code.
- The simplest and least error-prone method is to call MPI only outside parallel regions and to allow only the master thread to communicate between MPI tasks.
- MPI can also be called inside a parallel region, provided the MPI library is thread-safe.
