In many applications today, software must make decisions quickly. One effective way to achieve this is parallel programming in C/C++, including multithreading (multithreaded programming). Parallel programming is a programming approach that allows several calculations or processes to execute simultaneously. It is used to improve application performance by exploiting multi-core architectures and distributed systems. Parallel programming consists of breaking a problem down into sub-problems that can be solved simultaneously by several processing units. This reduces the overall execution time of a program by making effective use of the available hardware. Parallel machines offer a great opportunity for applications with large computational requirements; using them effectively, however, requires an in-depth understanding of how they work.

Let’s take a closer look at parallel computing and parallel programming.

1. What Is Parallel Computing?

In computing, the evolution of technology has led to significant advances in the way we approach complex problems. Among these advances, parallel computing stands out as a powerful alternative to traditional serial computing. To fully understand this concept, it helps to compare the two methods and explore their fundamental characteristics. So, let’s first look at serial computing and then move on to parallel computing.

Serial Computing: Traditionally, software has been written for serial computation. In this conventional model, a problem is broken into a discrete series of instructions that are executed sequentially, one after another, on a single processor. This inherently limits performance, because only one instruction can be processed at any given moment.

Parallel Computing: In contrast, parallel computing uses multiple computational resources simultaneously to solve a problem. The problem is divided into discrete parts that can be solved concurrently, and each part is further broken down into a series of instructions. Instructions from different parts execute simultaneously on different processors, which requires an overall control and coordination mechanism to combine the partial results correctly.

2. Overview of the different hardware architectures

In modern computing, various hardware architectures have been developed to address specific computational needs. Each is designed to optimize performance for particular tasks, ranging from general-purpose processing to specialized operations in graphics, machine learning, and natural language processing. This section explores the key architectures (CPU, GPU, GPGPU, TPU, NPU, LPU, DPU, and QPU) and highlights their distinct roles and applications in the evolving technology landscape.

2.1 CPU, GPU, and GPGPU Architectures

CPU, GPU, and GPGPU architectures are all types of computer processing architectures, but they differ in their design and operation.

- CPU: A central processing unit (CPU) is a general-purpose processor designed to perform a wide range of computing tasks, including data processing, arithmetic and logical operations, and communication between the different components of a computer system. Modern CPUs usually have multiple cores, so they can execute several tasks simultaneously.

- GPU: A graphics processing unit (GPU) is a processor designed to accelerate image and graphics processing. A GPU has hundreds to thousands of simple cores that process many pixels in parallel, which makes it well suited to video games, 3D modeling, and other graphics-intensive applications.

- GPGPU: General-purpose computing on graphics processing units (GPGPU) means using a GPU for work other than graphics processing. GPGPU runs computationally intensive workloads on the hundreds or thousands of cores available on the graphics card. It is particularly effective for parallel computing, machine learning, and other computationally intensive areas.

In conclusion, the main difference between CPUs, GPUs, and GPGPU lies in their design and intended use. CPUs are designed for general-purpose processing, GPUs are designed for specialized graphics processing, and GPGPU applies GPUs to computationally intensive work other than graphics.

2.2 TPU, NPU, LPU, DPU, and QPU Architectures

- TPU: A Tensor Processing Unit (TPU) is a specialized processor developed by Google to accelerate machine learning. Unlike general-purpose CPUs or GPUs, TPUs are designed specifically for tensor operations (chiefly large matrix multiplications), which account for most of the computation in deep learning models. This makes them very efficient at those tasks and can provide large speedups over CPUs and GPUs on suitable workloads.

- NPU: A Neural Processing Unit (NPU) is a specialized hardware accelerator designed to execute artificial neural network workloads efficiently and with high throughput. NPUs aim to deliver high performance while minimizing power consumption, which makes them suitable for mobile devices, edge computing, and other energy-sensitive applications. Their internal designs vary widely between vendors. TPUs, by contrast, are built around a systolic array: a grid of multiply-accumulate units that pass data directly to their neighbors, reducing the number of memory accesses per operation. Because NPU designs differ so much, comparisons of peak performance, latency, and power consumption between NPUs and TPUs depend on the specific chips being compared.

- LPU: Language Processing Units (LPUs) are a relatively new class of processor designed specifically for natural language processing workloads. While CPUs, GPUs, and TPUs all play significant roles in AI, LPUs target generative models that work with text, such as GPT (Generative Pre-trained Transformer), and may run these workloads more efficiently than GPUs. GPUs nevertheless remain strong choices for graphics and general AI workloads. Much of the power of generative AI comes from combining these processing units: CPUs handle overall control and coordination, GPUs accelerate the bulk of the computation, TPUs offer specialized efficiency for deep learning, and LPUs target natural language processing. Together, they enable the rapid development and deployment of models that generate highly realistic and complex outputs.

- DPU: A Data Processing Unit (DPU) is a specialized processor designed to optimize data-centric workloads in modern computing environments. It combines a multi-core CPU, hardware accelerators, and high-speed networking capabilities into a single system-on-chip (SoC). DPUs are primarily used to offload networking, security, and storage functions from the main CPU, allowing it to focus on running operating systems and applications. This class of programmable processors is becoming increasingly important in data centers and cloud computing, where DPUs help improve overall system efficiency and performance. DPUs can handle tasks such as packet processing, encryption, and data compression, and are sometimes described as a third pillar of computing alongside CPUs and GPUs. As data-intensive applications continue to grow, DPUs are expected to play an important role in optimizing data movement and processing in large-scale computing environments.

- QPU: A quantum processing unit (QPU) processes information using qubits instead of binary bits and is designed to run quantum algorithms. QPUs are best suited to certain kinds of highly complex problems, and many of today’s promising quantum algorithms produce probabilistic results rather than exact answers.

3. Why Use Parallel Computing?

Parallel computing is essential for modeling complex real-world phenomena that involve multiple simultaneous events. It utilizes data and task parallelism through shared or distributed memory models. Key benefits include improved performance and scalability, allowing for faster execution times and efficient adaptation to more powerful systems. While parallel computing presents challenges in development and debugging, it remains crucial for solving complex computational problems across various scientific and technological domains. Its ability to handle intricate simulations and process large datasets makes it an indispensable tool in modern computing.

Main Reasons for Employing Parallel Programming:

- Time and Cost Efficiency: In theory, allocating more resources to a task reduces its completion time, which can lead to cost savings. In addition, parallel computers can be built from inexpensive commodity components.

- Solving Large or Complex Problems: Many problems are so large or intricate that solving them with a serial program is impractical or impossible, particularly given the limited memory of a single computer.

- Concurrency: A single compute resource can execute only one task at a time, whereas multiple compute resources can perform many tasks simultaneously. For example, collaborative networks provide a global platform where people from around the world can meet and work together virtually.

- Utilizing Non-local Resources: Parallel computing allows for the use of computational resources across wide area networks or even the Internet when local resources are insufficient.

- Maximizing Hardware Efficiency: Modern computers, including laptops, are parallel by design, with multiple processors and cores. Parallel software is written to exploit this architecture, whereas traditional serial programs often leave much of a modern computer’s processing power unused.
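The word "theoretically" in the first reason matters: adding processors speeds up only the part of a program that can actually run in parallel. Amdahl’s law, a standard result not stated in the original text, expresses this limit. Assuming a fraction f of the run time can be parallelized perfectly and p processors are used, the best-case speedup S is:

```latex
S(p) = \frac{1}{(1 - f) + \dfrac{f}{p}},
\qquad
\lim_{p \to \infty} S(p) = \frac{1}{1 - f}
```

For example, with f = 0.9 and p = 8, S = 1 / (0.1 + 0.1125) ≈ 4.71, and no number of processors can push the speedup past 1 / (1 − 0.9) = 10. This is a simplified model that ignores communication and synchronization overhead, which in practice lowers the speedup further.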

4. Kokkos: A Modern Solution for Portable Parallel Programming

Parallel programming has become essential to fully exploit the capabilities of modern hardware architectures. These architectures include different types of specialized processors, each designed for specific tasks:

- CPU (Central Processing Unit) is the traditional general-purpose processor, capable of performing a wide variety of tasks but with a limited number of cores.

- GPU (Graphics Processing Unit) is optimized for massively parallel processing and is particularly efficient for graphics computations and certain types of algorithms.

- TPU (Tensor Processing Unit) is designed specifically for machine learning and artificial intelligence operations.

- NPU (Neural Processing Unit) is similar to the TPU, but is often integrated into mobile devices for on-device AI tasks.

Given this hardware diversity, Kokkos is a powerful solution for portable parallel programming. Kokkos is a C++ library that lets developers write high-performance parallel code that runs efficiently on a range of hardware architectures, including multi-core CPUs and GPUs. It provides a hardware abstraction for expressing parallel algorithms independently of the underlying architecture, and it selects suitable defaults (such as the execution backend and memory layout) for each target platform. Kokkos thus greatly simplifies the development of portable, high-performance parallel applications by letting developers focus on the algorithm rather than on the implementation details of each architecture. I therefore invite you to consult the Kokkos section after studying the basics of parallel programming.

5. Who Is Using Parallel Computing?

Science and Engineering

Historically, parallel computing has been considered "the high end of computing" and has been used to model difficult problems in many areas of science and engineering:

- Atmosphere, Earth, Environment
- Physics: applied, nuclear, particle, condensed matter, high pressure, fusion, photonics
- Bioscience, Biotechnology, Genetics
- Chemistry, Molecular Sciences
- Geology, Seismology
- Mechanical Engineering: from prosthetics to spacecraft
- Electrical Engineering, Circuit Design, Microelectronics
- Computer Science, Mathematics
- Defense, Weapons

Industrial and Commercial

Today, commercial applications provide an equal or greater driving force in the development of faster computers. These applications require processing large amounts of data in sophisticated ways. For example:

- "Big Data," databases, data mining
- Artificial Intelligence (AI)
- Oil exploration
- Web search engines, web-based business services
- Medical imaging and diagnosis
- Pharmaceutical design
- Financial and economic modeling
- Management of national and multinational corporations
- Advanced graphics and virtual reality, particularly in the entertainment industry
- Networked video and multimedia technologies
- Collaborative work environments

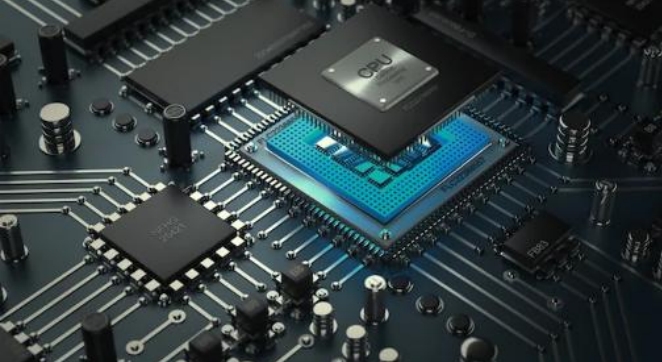

Computer Hardware (CPUs, GPUs, and Memory)

- CPU-chip – CPU stands for Central Processing Unit. This is the computer’s main processing unit; you can think of it as the 'brain' of the computer. It is the piece of hardware that performs calculations, moves data around, accesses memory, etc. In systems such as Princeton’s High Performance Computing clusters, CPU-chips contain multiple CPU-cores.

- CPU-core – An independent processing unit on a CPU-chip. Each CPU-core can execute its own independent stream of instructions.

- GPU – GPU stands for Graphics Processing Unit. Originally intended to process graphics, in the context of parallel programming it is used to perform a large number of simple arithmetic computations in parallel.

- MEMORY – In this guide, memory refers to Random-Access Memory (RAM). RAM stores the data that the CPU is actively working on.


Additional Parallelism Terminology

An understanding of threads and processes is also useful when discussing parallel programming concepts.

- If you think of the code you need to run as one big job, running it in parallel means dividing that job into several smaller tasks that can run at the same time. This is the general idea behind parallel programming.

- When tasks run as threads, they all share direct access to a common region of memory. The multiple threads belong to a single process.

- When tasks run as distinct processes, each process gets its own individual region of memory, even when the processes run on the same computer.

- Put even more simply: processes have their own memory, while threads belong to a process and share memory with all the other threads of that process.
