Reinforcement learning for micro-swimmers

In this example, we extend reinforcement learning to multiple sphere swimmers (\(N\geq 4\)). The three sphere swimmer was studied in details Q-learning_3SS.

1. \(N\)-sphere swimmers

We consider the \(N\)-sphere swimmers that consist of \(N\) aligned spheres of equal radii \(R\) linked by \(N-1\) arms of length \(L\). Unlike the three sphere swimmer, for \(N\geq 4\) swimmers have multiple strategies of swimming. The optimal swimming strategy of such swimmers was shown to be the traveling wave pattern. Using reinforcement learning, we want these swimmers to self-learn the optimal strategy of swimming .

2. Reinforcement learning : \(Q\)-learning algorithm

The Q-learning algorithm and the \(\epsilon\)-greedy scheme were discussedi in section 2 of Q-learning_3SS.

3. Rienforcement learning for \(N\)-sphere swimmers

3.1. States, actions and reward

Here, the agent is an \(N\)-sphere swimmer that consists of \(N\)- spheres linked by \(N-1\) arms. The spheres radii are fixed to \(R=1\) and each arm has a length \(L=10R\) and can be retracted by a length \(\varepsilon = 4R\).

The total number of configurations can an \(N\)-sphere swimmer have is \(2^{N-1}\). The swimmer can transition from a configuration to another by retrating or extending one of its arms. This transition results in a net displacement that provides a certain reward of taking such action in a certain configuration.

We choose the swimmer to be parallel to the \(x\) axis so that the net displacement will only be with respect to the \(x\) axis.

The states, actions, and the reward are defined as follow :

-

state : configuration of the \(N\)-sphere swimmer that is caracterized by the length of each arm of the swimmer.

-

action : retracting or extending one of the swimmer’s arms

-

reward : the \(x\)-net displacement gained after performing an action in a certain state.

4. Data files

The data files for the three sphere swimmer are available in Github here [Add the link]

5. Inpute parameters for \(Q\)-learning

For all \(N\)-sphere swimmers, we use the following parameters :

Parameter |

Description |

values |

\(\alpha\) |

Learning rate |

1 |

\(\gamma\) |

Discount factor |

0.99 |

\(\epsilon\) |

\(\epsilon\)-greedy parameter |

0.1 |

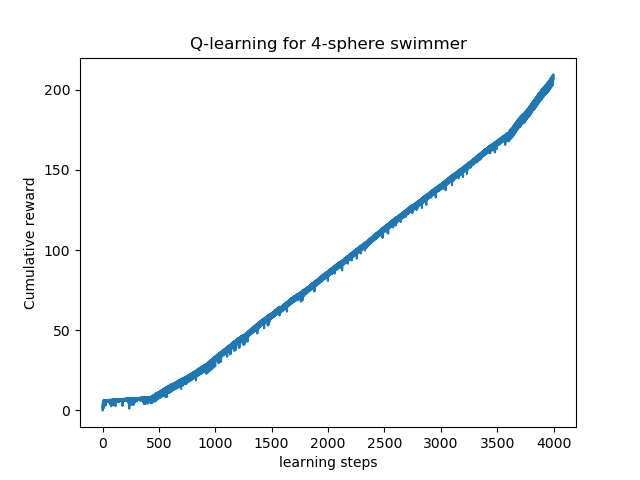

6. Self-learn the four sphere swimmer

Now we show the learning process of the four sphere swimmer using Q-learning algorithm

The swimmer learns several swimming strategies and changed the strategy once it finds a better one. Finally, it learns the optimal strategy (The traveling wave) after sufficient knowledge (\(N=3613\)).

Here are the states of the swimmer in the last sixteen steps of learning. Note that the swimmer state is referred by a list describing the state of each arm with \(True\) or \(False\). \(True\) means the arm is long while \(False\) means it is short.

For N = 3984 the swimmer state is [True, True, True] For N = 3985 the swimmer state is [False, True, True] For N = 3986 the swimmer state is [False, False, True] For N = 3987 the swimmer state is [False, False, False] For N = 3988 the swimmer state is [True, False, False] For N = 3989 the swimmer state is [True, True, False] For N = 3990 the swimmer state is [True, True, True] For N = 3991 the swimmer state is [False, True, True] For N = 3992 the swimmer state is [False, False, True] For N = 3993 the swimmer state is [False, False, False] For N = 3994 the swimmer state is [True, False, False] For N = 3995 the swimmer state is [True, True, False] For N = 3996 the swimmer state is [True, True, True] For N = 3997 the swimmer state is [False, True, True] For N = 3998 the swimmer state is [False, False, True] For N = 3999 the swimmer state is [False, False, False]

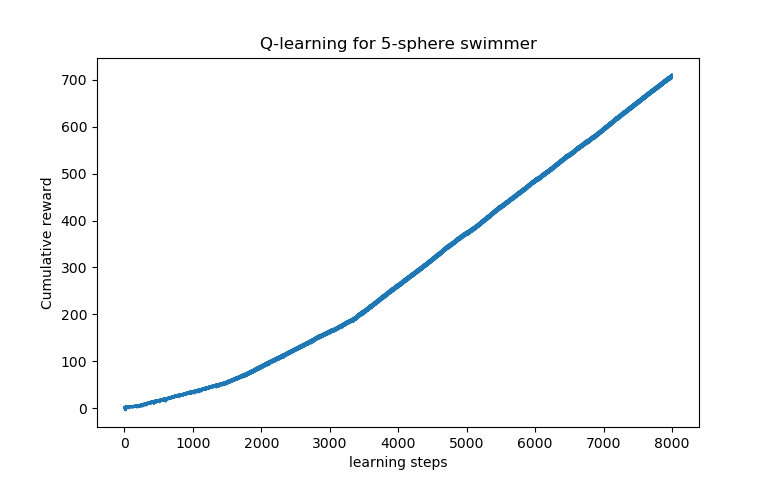

7. Self-learn the five sphere swimmer

Here, we present the learning process of the five sphere swimmer

The five sphere swimmer has multiple swimming strategies, which needs more learning steps than the Four or three-sphere swimmer. Hence, the swimmer learns the optimal policy as illustrated below.

Here, we present the state of the swimmer at the last twelve learning steps, which proves that the swimmer has learned the optimal strategy.

For N = 7988 the swimmer state is [True, True, True, True] For N = 7989 the swimmer state is [False, True, True, True] For N = 7990 the swimmer state is [False, False, True, True] For N = 7991 the swimmer state is [False, False, False, True] For N = 7992 the swimmer state is [False, False, False, False] For N = 7993 the swimmer state is [True, False, False, False] For N = 7994 the swimmer state is [True, True, False, False] For N = 7995 the swimmer state is [True, True, True, False] For N = 7996 the swimmer state is [True, True, True, True] For N = 7997 the swimmer state is [False, True, True, True] For N = 7998 the swimmer state is [False, False, True, True] For N = 7999 the swimmer state is [False, False, False, True]

References on Learning Methods

-

[gbcc_epje_2017] K. Gustavsson, L. Biferale, A. Celani, S. Colabrese Finding Efficient Swimming Strategies in a Three Dimensional Chaotic Flow by Reinforcement Learning Published on Eur. Phys. J. E (December 14, 2017) 10.1140/epje/i2017-11602-9 Download PDF

-

[Q-learning] Tsang, A.C., Tong, P., Nallan, S., Pak, O.S. (2018). Self-learning how to swim at low Reynolds number. arXiv: Fluid Dynamics.Download PDF

References on Swimming

-

[Najafi_2004] Ali Najafi, Ramin Golestanian. Simple swimmer at low Reynolds number: Three linked spheres. 2004. American Physical Society. doi.org/10.1103/PhysRevE.69.062901 linkDownload PDF

-

[nature] Jikeli, J., Alvarez, L., Friedrich, B. et al. Sperm navigation along helical paths in 3D chemoattractant landscapes. Nat Commun 6, 7985 (2015). doi.org/10.1038/ncomms8985 Download PDF

-

[bgp_cemracs_2019] Luca Berti, Laetitia Giraldi, Christophe Prud’Homme. Swimming at Low Reynolds Number. ESAIM: Proceedings and Surveys, EDP Sciences, 2019, pp.1 - 10, doi.org/10.1051/proc/202067004 Download PDF

-

[bcgp_three_spheres_2020] Luca Berti, Vincent Chabannes, Laetitia Giraldi, Christophe Prud’Homme. Modeling and finite element simulation of multi-sphere swimmers. 2020. hal-mines-paristech.archives-ouvertes.fr/ENSMP_CEMEF/hal-03023318v1 Download PDF

-

[gbcc_epje_2017] K. Gustavsson, L. Biferale, A. Celani, S. Colabrese Finding Efficient Swimming Strategies in a Three Dimensional Chaotic Flow by Reinforcement Learning Published on Eur. Phys. J. E (December 14, 2017) 10.1140/epje/i2017-11602-9 Download PDF

-

[purcell_1977] E.M. Purcell. Life at Low Reynolds Number, American Journal of Physics vol 45, p. 3-11 (1977). doi.org/10.1119/1.10903 Download PDF

.pdf

.pdf